After 17 years working in the media, I have returned to academia and been shocked to discover how much poor research practice there is, with all its consequences. Tracing the problems back to their roots, much of it seems to be driven by the scientific publication system. It is a commercial system akin to journalism: the pressure is to create stories that others want to read. Yet good research and good stories are two entirely different things, so this is not how academics should be sharing their primary work and having it assessed.

Octopus, then, is my idea of what a system would look like that was geared purely towards motivating and rewarding good research practices.

Firstly, of course, Octopus is an entirely Open platform, where all primary research would be published and readable for free. Using automatic language translation, everyone would read and write seamlessly in their own native language. However, this improved accessibility and searchability is not what drives good research practices.

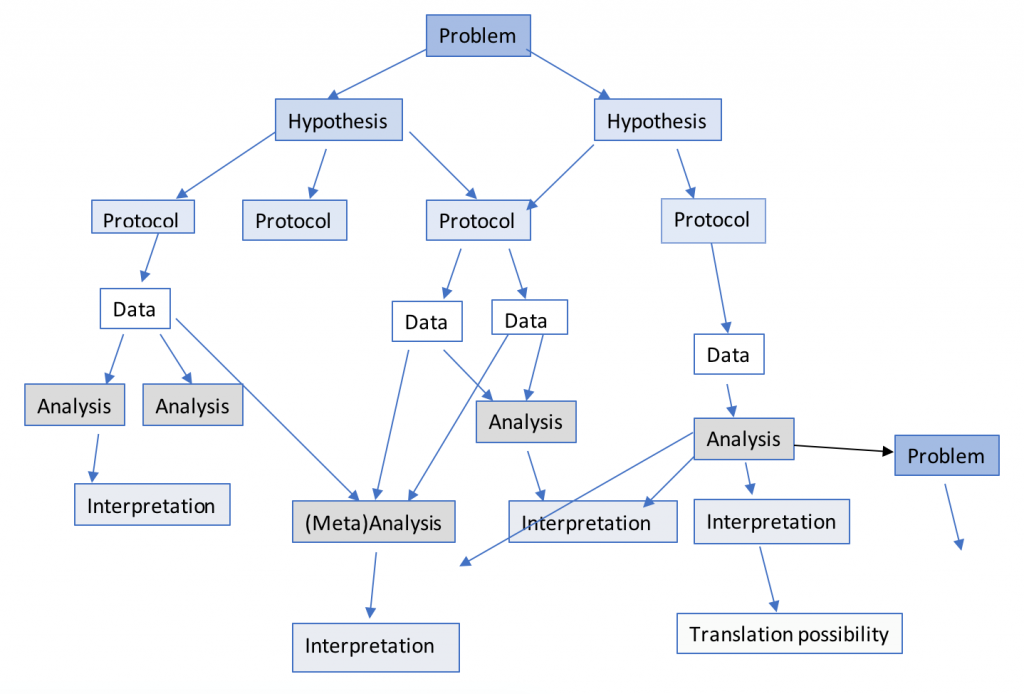

Key to Octopus is that the unit of publication is no longer ‘the paper’. Instead, there are 8 different types of publication. Seven of these break down the scientific process: from defining a problem, through coming up with hypotheses, methods to test those, results that have been collected according to those methods, analyses of those results, interpretations of those analyses, to the real-world implications of the findings. The 8th publication type is a review.

The first 7 form a chain. Apart from defining a new scientific problem, all have to be linked to one of the publications of the type above them (e.g. results have to be published linked to a method). Reviews are linked ‘horizontally’ to any other publication type.

Figure 1: The principle behind Octopus is to break the ‘unit of publication’ down from being a ‘paper’ to its constituent parts.
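To make the linking rules concrete, here is a minimal Python sketch of how the eight publication types and their links might be checked. The names `PubType`, `Publication` and `validate_link` are my own assumptions for illustration; nothing here is taken from an actual Octopus implementation.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class PubType(IntEnum):
    """The seven chained publication types, in order, plus the review type."""
    PROBLEM = 1
    HYPOTHESIS = 2
    METHOD = 3
    RESULTS = 4
    ANALYSIS = 5
    INTERPRETATION = 6
    IMPLICATIONS = 7
    REVIEW = 8


@dataclass
class Publication:
    pub_type: PubType
    title: str
    parent: Optional["Publication"] = None  # the publication this one links to


def validate_link(pub: Publication) -> None:
    """Raise ValueError if a publication breaks the linking rules (assumed reading)."""
    if pub.pub_type is PubType.PROBLEM:
        # A new problem starts a chain, so no link is required.
        return
    if pub.parent is None:
        raise ValueError(f"{pub.pub_type.name} must be linked to another publication")
    if pub.pub_type is PubType.REVIEW:
        # Reviews link 'horizontally' to any other publication type.
        if pub.parent.pub_type is PubType.REVIEW:
            raise ValueError("a review must link to a non-review publication")
        return
    # Every other type must link to the type directly above it in the chain.
    expected = PubType(pub.pub_type - 1)
    if pub.parent.pub_type is not expected:
        raise ValueError(
            f"{pub.pub_type.name} must link to a {expected.name}, "
            f"not a {pub.parent.pub_type.name}"
        )
```

In this sketch a results publication linked directly to a hypothesis would be rejected, because results have to be linked to a method.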

Breaking up the scientific chain explicitly like this has many consequences. Firstly, it removes the need to ‘tell a story’. A good hypothesis can be published immediately and judged on its own merits, without the pressure to provide data supporting it. Carefully collected results can be published regardless of how small the dataset is or what the results imply, and so on. Work can now be judged on its true quality and merits.

Secondly, it allows greater accountability and meritocracy. Smaller publication units mean smaller author groups, so the right people are credited for each specific piece of work. And that credit can be carefully defined: Octopus includes a rating system as well as reviews. Any user can rate a publication on pre-defined criteria. For instance, the three criteria that methods are rated on might be ‘Clearly written’, ‘Original’, and ‘Valid test of hypothesis’. Results might be rated on ‘Well annotated’, ‘Size of dataset’, and ‘Followed protocol’. By rating work on these true markers of quality, Octopus can drive good practice.
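As a rough illustration of how such ratings might be collected, here is a small Python sketch that averages user ratings per criterion. The criteria are the examples given above; the 1 to 5 scale, the `CRITERIA` table and the `average_ratings` function are assumptions of mine, since the rating scale has not been decided.

```python
from collections import defaultdict
from statistics import mean

# Example criteria from the text; the final lists may well differ.
CRITERIA = {
    "method": ("Clearly written", "Original", "Valid test of hypothesis"),
    "results": ("Well annotated", "Size of dataset", "Followed protocol"),
}


def average_ratings(pub_type, ratings):
    """Average each criterion over all user ratings.

    `ratings` is a list of dicts, one per user, mapping criterion -> score.
    Scores are assumed to be on a 1-5 scale (hypothetical).
    """
    allowed = CRITERIA[pub_type]
    scores = defaultdict(list)
    for rating in ratings:
        for criterion, score in rating.items():
            if criterion not in allowed:
                raise ValueError(f"{criterion!r} is not a criterion for {pub_type}")
            if not 1 <= score <= 5:
                raise ValueError("scores must be between 1 and 5")
            scores[criterion].append(score)
    # Only report criteria that at least one user has rated.
    return {c: mean(scores[c]) for c in allowed if scores[c]}
```

Keeping the criteria specific to each publication type means a method is never scored on, say, the size of a dataset it does not contain.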

Since publication establishes precedence, there is a benefit to researchers publishing each stage of their work as soon as they can. A tag would show which publications were written before completion of the ‘next step in the chain’ and which are post-hoc publications, encouraging a different, and better, way of working. The conclusions are no longer key: what matters is the quality of the process, not the outcome.
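The tagging rule could be as simple as comparing two dates. The Python sketch below is only an assumption about how it might work: the function name, the tag wording, and the idea that the completion date of the next step is recorded somewhere are all hypothetical.

```python
from datetime import datetime
from typing import Optional


def precedence_tag(
    published_at: datetime,
    next_step_completed_at: Optional[datetime],
) -> str:
    """Tag a publication by whether it preceded the next step in the chain.

    `next_step_completed_at` is None when the next step has not been done yet.
    """
    if next_step_completed_at is None or published_at < next_step_completed_at:
        return "published before next step"
    return "post-hoc"
```

For example, a method published in January, with results collected in March, would be tagged as published before the next step; the same method written up in April would be tagged post-hoc.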

You can read more about Octopus at sciencepublishing.online. Having won a small award from the Royal Society in London, I am currently working with designers to create mock-ups of the site whilst I search for funding to hire a software engineer to help build it. Octopus will be Open Source and designed in a modular way so that everyone can help improve it. It’s technically not a big challenge to build, so I hope to be able to start the revolution in the next 12 months!

Dr Alexandra Freeman

Executive Director

Winton Centre for Risk & Evidence Communication,

Centre for Mathematical Sciences,

Wilberforce Road,

Cambridge CB3 0WA

UK

af621@cam.ac.uk
