This is indeed the central theme of the current newsletter, and it has been the central theme of our discussions with numerous stakeholders over the past 10 years.

We need evidence that quality matters (do you remember this video?). There are of course several excellent meta-research publications (e.g., here), but these are either not widely known or not convincing enough for some of our peers. So, we need more education and more examples.

Invited by the VICT3R consortium, we tried to put together some of the best arguments we have and shared an example that is of particular relevance for this collaboration project (slides are here).

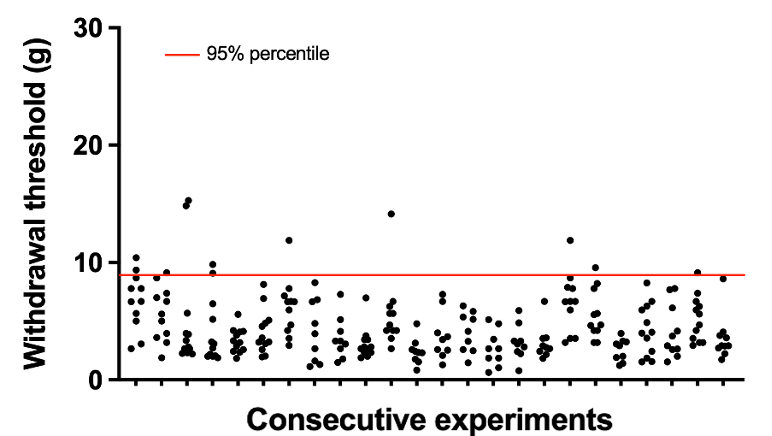

In short, some time ago we looked at the experiments performed over a period of 6 years using a chronic constriction injury model of chronic pain. In every experiment, there was a control group treated acutely with saline or other vehicles (po, sc or ip) instead of a test drug. Hence, there was a legitimate interest in using “historical” data instead of including a control group in every experiment. This intention seemed to be supported by the stable performance of the control groups in the past (each point represents an individual subject):

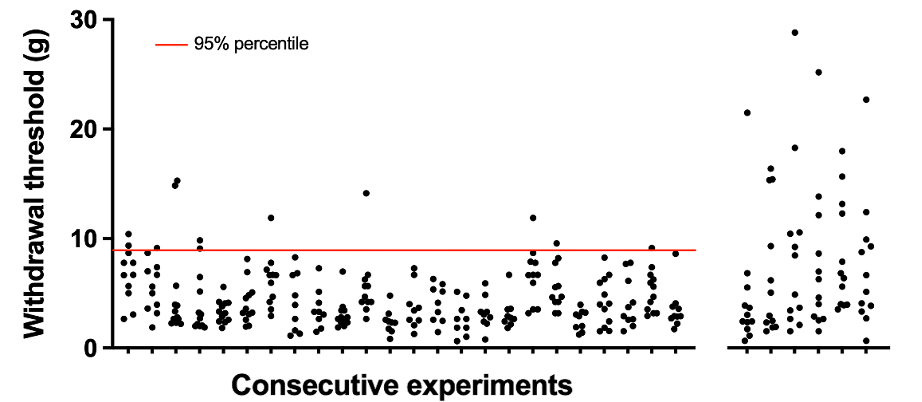

As the experiments had been conducted unblinded and treatment group allocation had not been concealed, we advised running a few blinded experiments with a control group included before making a decision.

Well, blinding clearly affected performance in the control groups:

What would be the conclusion? Avoid blinding because it worsens performance in the control group, leads to larger sample sizes and prevents the original idea from being implemented? Or, apply blinding to increase trustworthiness of the results even though it involves more work and costs more money?
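To make the "larger sample sizes" part of this trade-off concrete, here is a minimal sketch of how the required group size grows with variability. It assumes, purely for illustration, that the change seen under blinding shows up as a larger standard deviation in the control group. It uses the standard normal-approximation formula for comparing two group means. The function name `n_per_group` and all numbers are hypothetical and are not taken from the experiments described above.

```python
import math
from statistics import NormalDist


def n_per_group(sd, delta, alpha=0.05, power=0.80):
    """Approximate subjects per group for a two-sided, two-sample comparison.

    Normal approximation: n = 2 * (z_(1-alpha/2) + z_power)^2 * (sd / delta)^2
    sd    -- assumed common standard deviation (hypothetical value)
    delta -- smallest difference between group means worth detecting
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    n = 2 * (z_alpha + z_power) ** 2 * (sd / delta) ** 2
    return math.ceil(n)


# Hypothetical scenario: same target effect, but 50% more variability
# in the blinded setting than in the unblinded one.
n_unblinded = n_per_group(sd=1.0, delta=1.0)
n_blinded = n_per_group(sd=1.5, delta=1.0)
print(f"unblinded SD: {n_unblinded} per group; blinded SD: {n_blinded} per group")
```

Because the required group size scales with the square of the standard deviation, even a moderate increase in variability more than doubles the number of subjects in this made-up scenario. That is the cost side of the question above; it says nothing about which of the two estimates of variability is the trustworthy one.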
