Once, during our vacation in Greece, our children asked for ice cream after dinner. When we asked the waiter about it, he ran to the neighboring ice cream stand and bought some for us. Yes, there was no ice cream on the menu, but the customer must be satisfied (and we indeed were)! I thought about this story while reading a recent paper on using the Rigor and Transparency Index (RTI) to analyze scientific reporting quality across a large number of institutions globally (LINK). To make it clear upfront: the RTI tool is excellent, and we support its use as well as many other great initiatives and projects of the SciCrunch team (LINK). In other words, our commentary is not about the RTI tool itself but rather about the phenomenon that it may help reveal and characterize.

We have previously written about the issue of normative responding (LINK, LINK) and feel very strongly that journals’ efforts to improve the quality of reporting by revising guidance for authors should be complemented with additional measures. These measures could include wider adoption of preregistration (and the registered reports publishing format), more training and educational campaigns, as well as spot checks on selected publications (before the manuscripts are accepted) to see whether the reported research rigor measures were indeed correctly understood and implemented. Although not perfect, such checks may be done, for example, by requesting copies of study plans / protocols or sets of raw data.

The problem of normative responding is real, even though we have mostly anecdotal evidence for it. For example, in a recent conversation with an editor at a major pharmacological journal, we learned about instances in which authors were told that n=3 is not enough and that a minimum of n=5 is expected when significance testing is used to claim effects; the authors agreed readily and resubmitted the manuscript two weeks later with all the n=3s having become n=5s.

If normative responding is not controlled while more and more attention is given to the reporting of rigor measures in publications, we may face an interesting situation: authors and institutions that do not hesitate to check “rigor” boxes will score high even if the indicated measures are not fully understood and adequately implemented (yes, we are rather skeptical that many publications will admit that the studies were designed with insufficient rigor).

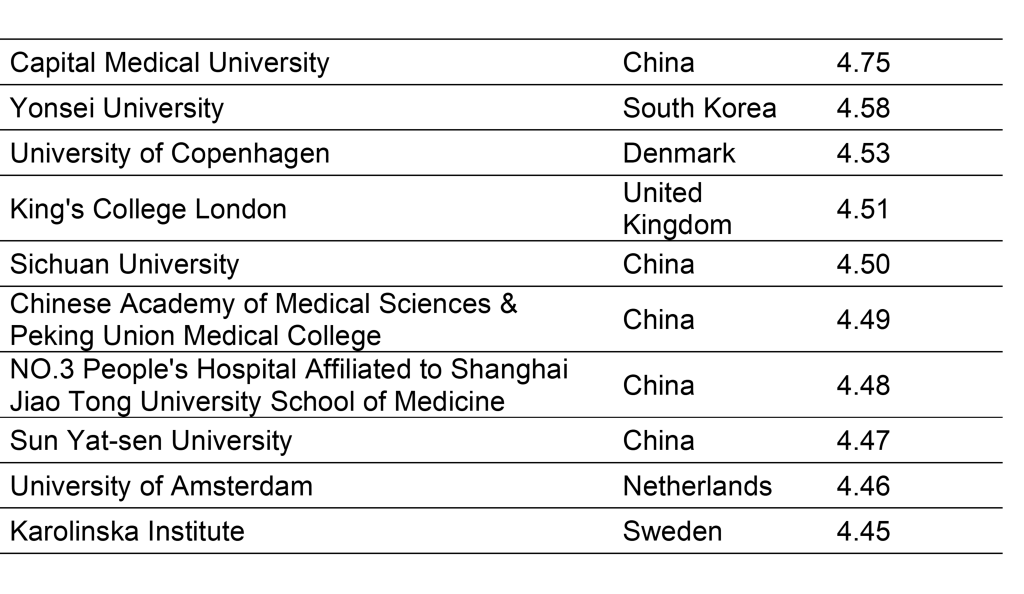

Among institutions publishing >1000 papers in 2020 and appearing in the PubMed Central open access set, Menke and colleagues (LINK) reported the following universities as the top 10 performers in terms of the RTI score:

Would you have expected to see such a list of top performers? We sincerely hope that the performance in this case is not biased by scientists and institutions confusing the true customer (“scientific progress”) with the wrong one (“publish or perish”).
