Dear Colleagues and Friends,

Welcome to the PAASP / EQIPD newsletter issue no. 49! This issue includes:

- News: Good Research Practice online course

- Webinar: Financing of biomedical innovation: Learning to separate the wheat from the chaff

- Thinking aloud: Imagine …

- Upcoming symposium: Assessment of benefit of animal research

- Proposal: Intentional misconduct or too busy to oversee the lab?

- Update: EU-Funded Project at GoEQIPD is Progressing

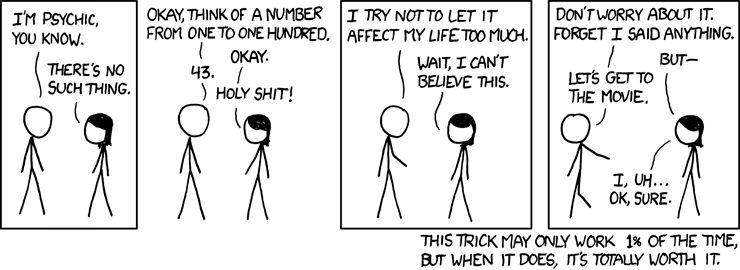

- May the laughter help us!

- Commentary: What if …

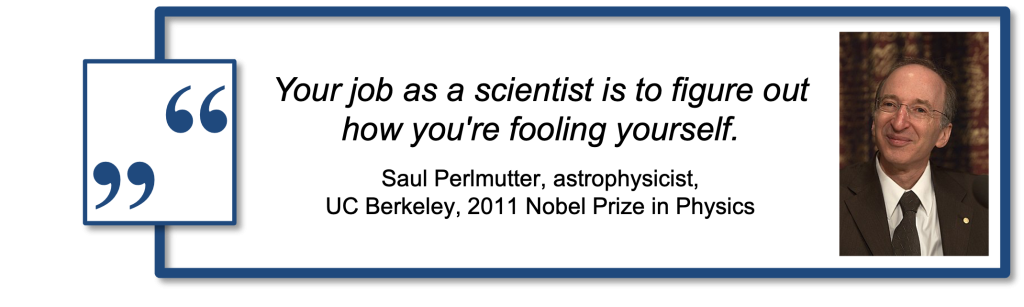

- Quote of the year

We wish all our readers, supporters, colleagues and friends a wonderful Holiday Season and a great start into the New Year!

Previous issues of our Newsletter can be found here.

If you have any questions or would like to discuss certain aspects in more detail, please do not hesitate to contact us at info@paasp.net!

Your EQIPD/PAASP team

Good Research Practice online course

We are approaching the end of this project, which has kept us very busy for almost two years. Our course is based on numerous courses, workshops and seminars that we and our colleagues have given to young scientists, live and online, since 2017.

These events took place in Germany and other countries at the request of learned societies, graduate schools or research collaboration consortia. We felt that they triggered genuine interest from young scientists who, unlike many of their more senior peers, do see good research practice as something essential that should not be postponed until other more important tasks are completed.

In Spring 2024, we released the course for beta-testing and were very happy to win support from several graduate schools in Europe and North America who invited their students to take the course.

After receiving feedback from 45 students across seven countries, we are now in the final steps of making updates to the course content as well as refreshing the video recordings.

The course is designed to introduce students, postdocs and other early-career researchers to best practices in experimental design, data management, reporting, and analysis – all with the overarching aim of making research outcomes more reliable and robust. The course features references to key articles, engaging videos and interactive quizzes, and concludes with a certification.

Please find an overview of the content on our website HERE.

We plan to launch the course in a few weeks. Stay tuned!

Webinar: Financing of biomedical innovation: Learning to separate the wheat from the chaff

The misconduct case of Eliezer Masliah highlights once again existing challenges in allocating capital to projects with the most robust and reliable supporting data. Sponsors of biomedical innovation appear not to know how to identify biased research.

Some areas of biomedical innovation may be particularly affected by investors betting on the wrong horses. For example, we were struck by a post listing recent high-profile misconduct cases: notably, all are from the neurodegeneration / Alzheimer’s disease field and all are directly or indirectly connected with privately funded biotech companies.

The problem concerns not only wasted or misallocated capital and the ethics of using research animals in drug development projects, but also the safety risks to which human subjects are exposed when testing of novel chemicals or biologics commences without sufficiently strong justification.

What makes the Alzheimer’s field so special? Is it the huge unmet medical need? Is it the resulting pressure to deliver? Is it related to the incredibly low probability of success when it comes to translating preclinical efficacy claims into therapeutic benefit for people with Alzheimer’s disease?

Without any doubt, there are many factors involved and there is no simple recipe. What can we do to help steer biotech funding away from low-rigor research and towards projects that truly deserve to be supported?

On January 31, 2025, at 16:00 CET / 10 am EST, we will give a webinar to provide practical recommendations for sponsors and funders of applied research on how to ensure robustness and reliability of the research output.

Key topics include identifying critical or decision-enabling evidence, pre-specification of study hypotheses and measures to prevent or minimise the risk of bias. We will also discuss what to do if decision-enabling studies were not performed in ‘confirmatory’ mode. Additionally, the webinar will explore best practices for data presentation, including the appropriate use of bar graphs, usage of effect sizes vs p-values, and image beautification practices.

The webinar is organized by the ECNP Preclinical Data Forum Network, co-hosted by GoEQIPD, CAMARADES and PAASP. Here is the registration link:

We ask you to help us disseminate information about this webinar (main audience: private investors and venture capital, licensing and acquisitions teams, due diligence teams, technology transfer offices, and other groups involved in financing of early / nonclinical stages of biomedical innovation).

Imagine …

… you go to a supermarket or a grocery store, buy everything you need, pay at the checkout but leave all your purchases in the store, come home, save the receipt for the end-of-the-month accounting and move on as if this is how it should be.

In 2017-2021, PAASP was part of the EQIPD consortium funded by the European Horizon 2020 program. At the end of 2023, two years after the project ended, we (PAASP) were informed about a finance audit to be performed by PricewaterhouseCoopers. To cut a long story short, this turned out to be a very lengthy and detailed process culminating in two PwC colleagues traveling from Romania (!) to Heidelberg to go through our books over four consecutive days. Several months after this visit, the final report was accepted by the European Commission. The outcome was positive but it gave us a lot of food for thought.

The funder must be really concerned that its money is spent properly (i.e., all expenses are correctly booked and documented) and does not mind spending significant amounts of money on such audits. This is understandable and PAASP is certainly not the only organization that is subjected to such assessments.

However, we struggle with the question: Why do funders not check whether what they funded actually generated a meaningful output? Why do we not hear about funders checking that the funded research left traces in the form of experimental records containing data documented according to preferred practices?

Upcoming symposium: Assessment of benefit of animal research

It is generally recognized that, to produce robust and reliable data, research must be protected against bias. Various measures have been introduced, from training programs to reporting guidelines, to make sure that “exploratory” and “confirmatory” research output (Dirnagl 2020) can be recognized on the basis of information provided in scientific publications and other reports.

However, the overall speed of introducing these changes remains slow and, even according to recent analyses, reporting of sample size estimation, randomization and blinding in preclinical research publications is still disappointingly low (Kousholt et al 2022). Such analyses illustrate two related challenges.

On the one hand, biased research is believed to be more likely to produce unreliable (“non-reproducible”) data and this is one of the arguments used by those who oppose the use of laboratory animals in biomedical research (we have repeatedly written about it – here, here and here).

On the other hand, the above-cited publication summarized reporting practices by scientists affiliated with Danish universities. In the EU, Directive 2010/63/EU explicitly mandates the conduct of a Harm-Benefit Analysis (HBA) of proposed animal research by Competent Authorities. HBA involves the evaluation of potential harms inflicted upon research animals against any potential benefits derived from the research. In practice, however, there is no alignment on how the benefit of the proposed research should be assessed.

To meet the need for a practical and approachable method for assessing research benefit, the HBA International Working Group was convened in January 2023. The working group consisted of nine participants representing a balance of diverse expertise in research, industry, and academia and included a PAASP team member. Two existing HBA frameworks were taken as starting points – one developed by AALAS-FELASA (Laber et al 2016) and the other implemented in Switzerland (Swiss Academies of Arts and Sciences 2022).

The working group constructed a Benefit Assessment Matrix (BAM) that is based on: (1) identification and definition of key factors defining proposed research benefit; (2) identification and definition of modifying factors (i.e., factors related to internal validity and technical feasibility that enable the proposed benefit to translate into qualified benefit); and (3) simple metrics for the user to determine if the criteria for each item have been met.

While the BAM tool does not represent or replace an assessment by Ethics Committees, it is expected that Ethics Committees or equivalent bodies will use it to recognize high-rigor research proposals (and, if needed, grant them a priority review) as well as to identify arguments to challenge the applicants regarding the benefit of proposed studies.

Further, the BAM tool can support scientists willing to highlight the benefit of the proposed studies as well as the implemented rigor measures to achieve the benefit objectives.

The Working Group is organizing a symposium entitled “Harm-Benefit Analysis: A Tool for the Assessment of Benefit” at the next FELASA congress (LINK) to present the BAM framework and seek further input. Readers of this newsletter who are interested in learning about the BAM in detail and are willing to provide feedback and pilot-test it are invited to contact the group via email (info@paasp.net).

Intentional misconduct or too busy to oversee the lab?

The recent scientific misconduct in the laboratories of Eliezer Masliah is not the first such case and will unfortunately most likely not be the last one. From what was publicly disclosed, it is not clear to what extent (if at all) Dr. Masliah himself was aware of the misconduct and whether he directly contributed to it.

However, it is very obvious that prominent scientists like Dr. Masliah have many activities to attend to (meetings, travel, writing, administrative duties, etc.). As a result, there is often little time left to oversee what is actually going on in the laboratory. If, on top of that, laboratory members are under extreme pressure to deliver results that are publishable (e.g., publication requirements for PhD students) and are aligned with the senior colleagues’ hypotheses, the risks of generating biased research outputs can be enormously high.

We propose a simple “risk factor” checklist (below) that can be easily administered by senior scientists themselves as well as the research institution officials to recognize such threats and to initiate pre-emptive protection measures.

| Risk area | Risk factors |
| --- | --- |
| Significant pressure that may bias the research output | High publication activity (e.g., ≥10 papers per year as first and/or last author – here and below, quantitative thresholds are to be defined as, for example, the upper quartile of the overall distribution); performance / evaluation criteria based on number and/or impact factor of publications (for anyone in the laboratory, from PhD students to PIs); above-average funding support |
| Laboratory actively engaged in commercialization of the research output | Industry contracts (fee-for-service, sponsored research collaboration); technology transfer activities (e.g., licensing activity, patent applications) |
| PI lacks time to regularly oversee activities in the laboratory | Frequent travel (conferences, etc.) (e.g., cumulatively ≥2 months per year); large labs (e.g., ≥6 members directly reporting to the PI); extensive collaboration networks inside and outside the institution (e.g., ≥10 external co-authors on papers in the last 2 years) |
| PI lacks resources to regularly oversee activities in the lab | No electronic laboratory notebook; no error management system (i.e., a system that is used to capture, report and follow up on accidents and errors); no spot checks on raw data and key research processes performed regularly by the PI and/or dedicated laboratory manager |
| Laboratory members receive no training to protect against risks of bias | No access to and/or no training about internal institutional research integrity policies (office, ombudsman, trusted person, anonymous reporting procedure, etc.); no standard onboarding / training of new students and postdocs; no Good Research Practice training provided routinely to junior scientists |

We suggest that the presence of a certain threshold number of risk factors should motivate the PI to run spot checks on randomly selected research output from any lab member, to see whether (s)he may be facing challenges similar to those that seem to have cost Dr. Masliah his career.
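For readers who would like to try the idea on their own lab, the checklist logic can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the field names, the subset of checklist items and, in particular, the trigger of three risk factors are hypothetical placeholders of ours, and the numeric cut-offs are simply the "e.g." values from the draft checklist, which are still to be defined.

```python
from dataclasses import dataclass


@dataclass
class LabProfile:
    """Hypothetical self-assessment record for one laboratory."""
    papers_per_year: int            # as first and/or last author
    travel_months_per_year: float   # cumulative time away from the lab
    direct_reports: int             # members reporting directly to the PI
    external_coauthors_2y: int      # external co-authors, last 2 years
    has_industry_contracts: bool
    has_electronic_notebook: bool
    has_error_management: bool
    runs_raw_data_spot_checks: bool
    provides_grp_training: bool


def present_risk_factors(lab: LabProfile) -> list[str]:
    """Return the names of the checklist items that apply to this lab."""
    # Cut-offs are the illustrative "e.g." values from the draft checklist.
    checks = [
        ("high publication activity", lab.papers_per_year >= 10),
        ("frequent travel", lab.travel_months_per_year >= 2),
        ("large lab", lab.direct_reports >= 6),
        ("extensive collaboration network", lab.external_coauthors_2y >= 10),
        ("industry contracts", lab.has_industry_contracts),
        ("no electronic laboratory notebook", not lab.has_electronic_notebook),
        ("no error management system", not lab.has_error_management),
        ("no spot checks on raw data", not lab.runs_raw_data_spot_checks),
        ("no Good Research Practice training", not lab.provides_grp_training),
    ]
    return [name for name, present in checks if present]


def spot_checks_recommended(lab: LabProfile, threshold: int = 3) -> bool:
    """True if at least `threshold` risk factors are present.

    The default of 3 is an assumption for this sketch, not a recommendation.
    """
    return len(present_risk_factors(lab)) >= threshold


# Made-up example lab, for illustration only.
example_lab = LabProfile(
    papers_per_year=12,
    travel_months_per_year=1.0,
    direct_reports=8,
    external_coauthors_2y=4,
    has_industry_contracts=False,
    has_electronic_notebook=True,
    has_error_management=False,
    runs_raw_data_spot_checks=True,
    provides_grp_training=True,
)

print(present_risk_factors(example_lab))
print(spot_checks_recommended(example_lab))
```

A simple count treats all risk factors as equally important. Whether some items should weigh more than others is exactly the kind of question we hope to settle when refining the checklist.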

We invite readers of this newsletter to work with us to refine the draft checklist and to develop a strategy for its broad dissemination and implementation.

Update: EU-Funded Project at GoEQIPD is Progressing

The Guarantors of EQIPD are involved in the EU-funded iRISE project (Improving Reproducibility in Science) and are conducting two studies on the EQIPD Quality System.

The first study focuses on EQIPD-certified research units, where interviews and surveys will assess the impact of the quality system.

The second study involves a survey within the broader research community. This survey is still open and can be shared with researchers who have not yet participated (Click HERE). As part of the iRISE project, we are supporting research units in implementing EQIPD and offering free certification assessments. After implementation, a follow-up survey will evaluate the practices in the certified research groups. Researchers can still join this study.

For more information, please contact Björn.

May the laughter help us!

Source: https://xkcd.com/628/

Commentary: What if …

Several years ago, we discussed potential scenarios related to the outcome of Cassava’s Phase 3 program. To remind our readers, concerns were repeatedly expressed over the quality of studies conducted by Cassava Sciences with its lead drug simufilam (here, here).

If these studies were to fail, we would have a telling example about the negative consequences of deviating from good research practices. However, a more difficult outcome scenario would be if these studies succeeded (despite the data quality issues). Then all our wisdom about research rigor would be worth nothing since the winner is always right.

As recently announced, Cassava’s studies failed, and we now have rather ambivalent feelings.

On the one hand, we have to admit that it almost feels like a relief because drug development should not be a game of roulette.

On the other hand, this is bad news for the patients whose expectations were betrayed by whoever made the decision to fund Cassava’s program (thereby depriving more deserving projects of funding).

Cassava may not be a unique case. Another example is CervoMed (formerly EIP Pharma), which we have been following for several years and which recently disclosed negative results from yet another clinical RCT.

The key publication describing the preclinical profile of their lead asset was flagged on PubPeer and also caught our attention for several reasons, for example:

- Data presented in Figure 2D do not match those provided in the source data file. Some data were apparently excluded from analysis without this being mentioned (and, interestingly enough, analyzing the provided dataset in GraphPad Prism replicates the ANOVA results but not the P value);

- Regarding the sample size and number of animals used for each study, the Reporting Summary states that ‘no statistical methods were used to predetermine sample sizes, but our sample sizes are choices according to those reported in previous publications’.

All of the above might be acceptable for exploratory research but we are convinced that clinical trials should be initiated based on much more rigorous evidence (hopefully, readers of this newsletter share our view).

Did CervoMed have better quality data that were left unpublished? We do not know because, for IP or other business reasons, companies often delay or avoid publication of data.

This lack of transparency reveals another category of stakeholders getting disappointed by the news from Cassava, CervoMed and others – those who buy shares of these companies. As previously discussed, a large proportion of biotech companies are publicly traded without having any asset beyond clinical proof-of-concept.

What makes people invest in stocks of such companies? Prior achievements of the management team? Backing by key opinion leaders? Or skillful presentation of exploratory data as decision-enabling?

Or, perhaps, is it the fear of missing an opportunity? A colleague recently wrote to us explaining that, “… in a financial sense, it would be more “risky” to short-sell small cap biotech stocks than to own small cap biotech stocks. That is because if you own a stock, you can lose no more than 100% of your investment. If you are short a stock, and it goes up from 40 cents a share to $4 a share, you have lost 1000%.”
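The asymmetry our colleague describes can be checked with a few lines of Python. This is a simplified sketch: the function names are ours, and fees, margin requirements and borrowing costs are ignored. Measured against the initial sale price, a move from $0.40 to $4.00 corresponds to a loss of about 900% for the short seller (the share price has risen to 1000% of its starting value), whereas the owner of a stock cannot lose more than 100%.

```python
def long_return(buy_price: float, current_price: float) -> float:
    """Return on owning a share, as a fraction of the purchase price."""
    return (current_price - buy_price) / buy_price


def short_return(sell_price: float, current_price: float) -> float:
    """Return on a short position, as a fraction of the initial sale price."""
    return (sell_price - current_price) / sell_price


# Worst case for an owner: the share price drops to zero.
print(f"long, price falls to 0:  {long_return(0.40, 0.0):.0%}")

# The colleague's example: short at 40 cents, price rises to 4 dollars.
print(f"short, price rises 10x:  {short_return(0.40, 4.00):.0%}")
```

The potential loss on a short position has no upper bound, which is the sense in which betting against a weak dataset can be financially riskier than betting on it.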

Indeed, what if one does take concerns about research rigor seriously but Cassavas and CervoMeds still succeed?

Quote of the year